Extended reality for people with serious mobility and verbal communication diseases

Focus Area(s): People with serious disabilities

Scenario description:

Pilot 3 of the SUN Project marks a significant advancement in the development of human-centered rehabilitation technologies, specifically designed for individuals with severe motor and verbal communication disabilities. Unlike traditional uses of eXtended Reality (XR) that focus on entertainment or training, this pilot emphasizes emotional connection, social presence, and motivation. The main objective was to prove that, by leveraging intelligent sensing and immersive environments, people with profound physical impairments could regain agency: communicating, making choices, and interacting meaningfully with others.

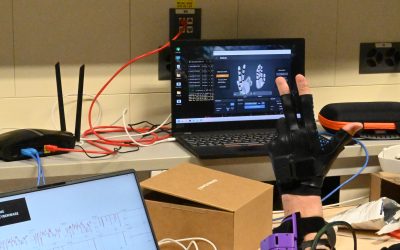

At the heart of the SUN XR platform is a sophisticated, non-invasive body-machine interface. This interface uses electromyography (EMG) to detect even minimal muscle contractions, such as those in the forearm, which are often the only voluntary movement left in patients with tetraplegia or severe stroke. These muscle signals are decoded in real time and translated into virtual actions, like moving an avatar or grasping objects, enabling intuitive and expressive interaction without the need for traditional input devices.
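As a rough illustration of how weak muscle activity can be turned into a virtual action, the sketch below rectifies a raw EMG trace, smooths it into an envelope, and triggers an action when the envelope stays above a multiple of the resting level. This is a minimal sketch under stated assumptions, not the SUN decoder: the function names, the window length, the threshold factor, and the "grasp" action label are all hypothetical, and the actual platform may use a different decoding method.

```python
def emg_envelope(samples, window=50):
    """Rectify the raw EMG signal and smooth it with a moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that just left the averaging window.
            running -= rectified[i - window]
        envelope.append(running / min(i + 1, window))
    return envelope


def detect_contraction(envelope, rest_level, factor=3.0):
    """Flag samples whose envelope exceeds a multiple of the resting level."""
    threshold = rest_level * factor  # assumed calibration rule
    return [e > threshold for e in envelope]


def to_action(active_flags, min_active=20):
    """Map a sustained contraction to a hypothetical 'grasp' action."""
    run = 0
    for flag in active_flags:
        run = run + 1 if flag else 0
        if run >= min_active:
            return "grasp"
    return "idle"


# Synthetic example: a resting signal followed by a contraction burst.
rest = [0.01 * (-1) ** i for i in range(200)]
burst = [0.5 * (-1) ** i for i in range(200)]
flags = detect_contraction(emg_envelope(rest + burst), rest_level=0.01)
print(to_action(flags))
```

Requiring the contraction to persist for several samples (`min_active`) is one simple way to avoid triggering actions on brief noise spikes.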

To enhance the sense of immersion and embodiment, the system provides multisensory feedback. Users receive tactile cues through a vibrotactile armband, signalling when gestures are recognized or actions are completed. Additionally, thermal feedback allows users to feel temperature changes, such as the coolness of a glass or the warmth of a virtual handshake. This combination of visual, tactile, and thermal input makes virtual interactions feel more real and engaging.

Pilot 3 applied this integrated technology to two main clinical scenarios. The first scenario, Immersive Interaction for Individuals with Limited Mobility, was aimed at patients with incomplete spinal cord injuries or post-stroke motor deficits. The virtual environment, built with Unity, allowed users to perform everyday activities, such as approaching objects, opening doors, or expressing basic needs, using EMG control. For non-verbal individuals, a communication module enabled the creation of simple sentences, providing a much-needed avenue for social participation and emotional expression.

The second scenario addressed clinical apathy, a significant barrier in neurorehabilitation. Apathy, commonly seen after stroke or brain injury, reduces motivation to engage in therapy. The SUN team adapted an effort-based decision-making task into a VR format, where patients could earn emotionally relevant rewards, such as interacting with a loved one’s avatar or accessing enjoyable media. This scenario is being further enhanced with non-invasive brain stimulation to target deep reward circuits, aiming to boost motivation and engagement.
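To make the idea of an effort-based decision-making task concrete, the sketch below models a single trial in which a patient chooses between resting and exerting effort for a reward. It is an illustrative assumption, not the SUN team's task design: the parabolic effort cost, the logistic choice rule, and the parameters `k` (effort sensitivity) and `beta` (choice consistency) are one common modelling choice, and all names here are hypothetical.

```python
import math


def subjective_value(reward, effort, k):
    """Reward discounted by a parabolic effort cost (assumed model)."""
    return reward - k * effort ** 2


def p_accept(reward, effort, k, beta=1.0):
    """Probability of accepting the effortful offer over a rest option worth 0."""
    return 1.0 / (1.0 + math.exp(-beta * subjective_value(reward, effort, k)))


# A higher effort sensitivity k (as might be expected with apathy) lowers
# the probability of accepting the same offer.
for k in (0.5, 1.0, 2.0):
    print(k, round(p_accept(reward=4.0, effort=2.0, k=k), 3))
```

In this toy model, making the reward more emotionally relevant corresponds to raising `reward`, which increases the probability that the effortful option is chosen.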

The SUN platform’s modular architecture includes an EMG decoding unit, haptic and thermal feedback modules, emotional-state monitoring sensors, and a central VR application that integrates all data streams in real time. Validation took place in two phases: technical validation with healthy volunteers at EPFL’s Campus Biotech in Geneva, and a clinical feasibility study at the Clinique Romande de Réadaptation in Sion, Switzerland. Participants found the system stable, intuitive, and highly immersive. Patients appreciated the comfort and motivational benefits, and clinicians noted its usability and potential to complement traditional therapy.

Additionally, the platform was tested for cybersecurity by simulating GPU-based malware attacks during XR sessions. The intrusion detection system successfully identified threats with no false alarms, demonstrating the feasibility of real-time security monitoring.
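The phrase "no false alarms" can be read in terms of standard detection metrics. The sketch below computes a detection rate and a false-alarm rate from per-interval ground truth and alerts. The data are synthetic and purely illustrative, not results from the SUN tests, and the function name is hypothetical.

```python
def detection_metrics(attack_present, alert_raised):
    """Return (detection_rate, false_alarm_rate) from paired boolean lists."""
    pairs = list(zip(attack_present, alert_raised))
    tp = sum(1 for truth, alert in pairs if truth and alert)
    fn = sum(1 for truth, alert in pairs if truth and not alert)
    fp = sum(1 for truth, alert in pairs if not truth and alert)
    tn = sum(1 for truth, alert in pairs if not truth and not alert)
    detection_rate = tp / (tp + fn) if (tp + fn) else 0.0
    false_alarm_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return detection_rate, false_alarm_rate


# Synthetic session: attacks simulated in intervals 3 and 4 only.
truth = [False, False, False, True, True, False]
alerts = [False, False, False, True, True, False]
print(detection_metrics(truth, alerts))
```

A false-alarm rate of zero means that no alert was raised in any interval where no attack was being simulated.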

On the SUN YouTube channel you can watch a video showing the validation performed at Versilia Hospital.

Technical challenges:

● Real Physical Haptic Perception Interfaces

● Non-invasive bidirectional body-machine interfaces

● Wearable sensors in XR

● Low latency gaze and gesture-based interaction in XR

● 3D acquisition with physical properties

● AI-assisted 3D acquisition of unknown environments with semantic priors

● AI-based convincing XR presentation in limited resources setups

● Hyper-realistic avatar

Impact:

The results of this scenario will provide a radical new perspective on human capital improvement in R&I and will allow excluded people to communicate their ideas, while providing huge benefits for the people who will use this technology. The project is expected to deliver usable prototypes that will open the way for quick industrialisation, addressing improvements in people’s quality of life, work and education.

Latest News

Pilot 3 Validation Phase Successfully Conducted at the CRR in Sion

The big day has finally arrived! The Pilot 3 validation phase of the SUN project took place at the Clinique Romande de Réadaptation (CRR) in Sion. This important milestone marked the culmination of several months of preparation and collaboration among all SUN...

SUN approach to improve motor control in incomplete tetraplegia

Incomplete tetraplegia, a partial paralysis affecting all four limbs due to cervical spinal cord injuries, is more common than complete tetraplegia in Europe. It significantly impairs upper limb motor function and daily activities. The SUN platform pilot project aims...

AI, XR and the patient perspective

1. Introduction Context AI-driven processes are transforming healthcare by enabling new diagnostic methods, personalizing treatments, improving administrative efficiency, and driving research innovations. At the same time, these benefits must be critically evaluated...

SUN Project Shines at HELT Symposium: Pioneering Human-Centered XR for One-Health

The EU Horizon SUN Project (Social and Human-Centered XR) took center stage at the Health, Law, and Technology (HELT) Symposium on April 24th, hosting a groundbreaking workshop titled “Social and...

SUN Project Pilot 3 Phase One Completed at Campus Biotech, Geneva

From April 14–16, 2025, the SUN project team gathered at Campus Biotech in Geneva for a crucial milestone: the validation of the interactive system with healthy participants. The system allows users to control virtual environments through forearm EMG signals,...

Non-invasive brain stimulation (NIBS) techniques

Non-invasive brain stimulation (NIBS) techniques, such as transcranial magnetic stimulation (TMS) and transcranial direct current stimulation (tDCS), are increasingly being used in stroke rehabilitation to enhance recovery and neuroplasticity. NIBS promotes brain...

Pilot 3 final scenario assessment

On September 6, 2024, the solutions and methodologies under development in the SUN XR project for Pilot 3 were shared with clinicians and therapists from our partner EPFL, particularly focusing on three pilot scenarios aimed at enhancing patient care....

Haptic glove in rehabilitation therapies

Virtual reality is revolutionizing the user experience in different fields, also through the integration of advanced haptic devices. Within the SUN project, Scuola Superiore Sant'Anna is developing these devices to enhance the user experience in immersive and...

The three pilots are ready to be carried out

All requirements and procedures needed to execute the experimentation in the different scenarios were carefully identified. The validation process will occur in two phases: the first phase will involve healthy individuals utilizing the initial release of the SUN...

Sensory perception and acceptance of prosthetic limbs

Researchers at EPFL in Switzerland have created a groundbreaking technology that allows amputees to experience the sensation of wetness through their prosthetic limbs. This innovation involves a sensor attached to the prosthetic hand connected to a stimulator that...

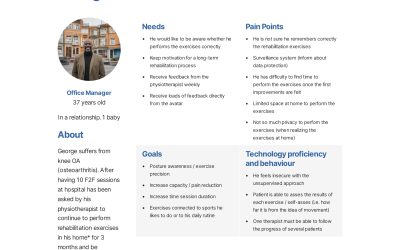

SUN XR User requirements

SUN user requirements have been finalised by KPEOPLE and IN2 (https://in-two.com/). The phases of co-creating user requirements went from personal interviews with the end users participating in the project (CRR-Suva, OAC, ASL-NO, FACTOR) to workshops with all partners...

Highly wearable haptic interfaces

Virtual reality is becoming increasingly popular, embracing not only pure entertainment but also crucial industrial and rehabilitative sectors. Within the SUN XR project, the new challenges of virtual reality are materializing through...

SUN – VR Application for Cerebral Rehab after Stroke

Over 80% of stroke patients suffer from motor deficits in the acute phase, and 30-50% still do in the chronic phase. The SUN XR Platform has the potential to reach a large group of stroke patients, especially in view of the more than 1.5 million strokes per year in...

Towards a prosthetic hand

Involvement of end-users to ensure the usefulness of the solutions in Pilot 3

On January 22, we held our first SUN-XR stakeholder meeting at the Clinique romande de réadaptation. It was an excellent opportunity to bring together representatives of patients’ associations (@Fragile Suisse), clinicians and innovation specialists (@The ARK, @EPFL)....

First Pilot 3 External Stakeholders’ Meeting in Sion (CH)

The role of external stakeholders is crucial to guaranteeing the "social" aspects of our project. In view of that, a stakeholders' group is going to be established in each pilot. The first Pilot 3 stakeholders' meeting took place in Sion (CH), involving professionals,...

SUN-XR to help stroke patients recover

Annually, 15 million people are affected by a stroke worldwide. For a third of these patients, the stroke is fatal, while another third will suffer permanent disability. The rehabilitation process for patients starts in the early days after the injury and represents a...