Extended reality for people with serious mobility and verbal communication diseases

Focus Area(s): People with serious disabilities

Scenario description:

Some people with motor disabilities, or who have had a stroke, have great difficulty communicating with others and even expressing their vital needs. The project takes up the challenge of finding a dedicated communication pathway for these people, making it possible to capture specific social cues and transform them into clear communication or actions. The proposal is to build on residual abilities and give them communicative meaning, supported when needed by avatars in a virtual environment. The objective is to realise low-cost, non-invasive tools based, for instance, on existing biofeedback, facial expressions and other inputs. The challenge will be to create a solution that allows person-specific extended reality interaction to be designed even at home, in the workplace or at school, and to develop novel multi-user virtual communication and collaboration solutions that provide coherent multisensory experiences and optimally convey relevant social cues.

Successful implementation of the pilot for this scenario will allow people with communication and motor disabilities to interact with friends and relatives, meeting realistically in an extended (physical + virtual) environment. The person with communication and motor disabilities will be represented by an avatar that interacts with other people who are present in an augmented physical environment. At the same time, the person will have the illusion of being in the same physical environment as the other people, and can interact through the new interfaces offered by SUN. To this end, SUN will develop a new generation of non-invasive bidirectional body-machine interfaces (BBMIs), which will allow people with different types of sensory-motor disabilities to interact smoothly and effectively with virtual reality and avatars.
The BBMI will decode motor information using arrays of printed electrodes, which record muscular activity, together with inertial sensors. The position of the sensors will be customised to the specific motor abilities of each subject. For example, shoulder and elbow movements could be used, and muscular activity could also be recorded from the auricular muscles. All these sensors will record information during different upper-limb and hand movements. We will use a procedure to identify the signals most useful for the different tasks and implement a dedicated decoding algorithm. This approach has already yielded a simplified yet very effective way to control flying drones, and is expected to give promising results in this case as well. A machine learning approach will be implemented to decode the different tasks, building on human-machine interfaces and, more generally, on the decoding of information from electrophysiological and biomechanical signals. SUN will also provide sensory feedback to the user about movement and interaction with the avatar in the virtual environment, using transcutaneous electrical stimulation or small vibration actuators.
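
The two decoding steps described above (identifying the most useful signals, then training a decoder) can be illustrated with a minimal sketch. It is not the SUN decoding algorithm: the synthetic data, the RMS feature, the Fisher-score channel ranking, the nearest-centroid classifier and all function names are assumptions chosen only to keep the example short and self-contained.

```python
import numpy as np

def rms_features(windows):
    """Map (n_windows, n_samples, n_channels) signals to per-channel RMS amplitude."""
    return np.sqrt(np.mean(windows ** 2, axis=1))

def fisher_scores(X, y):
    """Per-channel ratio of between-class to within-class scatter (higher = more useful)."""
    overall = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - overall) ** 2
        within += ((Xc - Xc.mean(axis=0)) ** 2).sum(axis=0)
    return between / (within + 1e-12)

def select_channels(X, y, k):
    """Return the indices of the k highest-scoring channels, in ascending order."""
    return np.sort(np.argsort(fisher_scores(X, y))[::-1][:k])

class NearestCentroidDecoder:
    """Assign each window to the task whose mean feature vector is closest."""
    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        dist = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[np.argmin(dist, axis=1)]

def make_synthetic_windows(n_per_class=40, n_samples=200, n_channels=8, seed=0):
    """Hypothetical data: task 0 is rest, task 1 activates channel 1, task 2 channel 5."""
    rng = np.random.default_rng(seed)
    gains = np.ones((3, n_channels))
    gains[1, 1] = 4.0
    gains[2, 5] = 4.0
    windows, labels = [], []
    for label in range(3):
        noise = rng.standard_normal((n_per_class, n_samples, n_channels))
        windows.append(noise * gains[label])
        labels.append(np.full(n_per_class, label))
    return np.concatenate(windows), np.concatenate(labels)

windows, labels = make_synthetic_windows()
X = rms_features(windows)
train = np.arange(len(labels)) % 2 == 0            # even-indexed windows for training
channels = select_channels(X[train], labels[train], k=2)
decoder = NearestCentroidDecoder().fit(X[train][:, channels], labels[train])
accuracy = np.mean(decoder.predict(X[~train][:, channels]) == labels[~train])
print("selected channels:", channels, "held-out accuracy:", accuracy)
```

In a real setting the channel ranking would be recomputed for each subject, since the text notes that sensor placement depends on the person's residual motor abilities.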

Technical challenges:

● Real Physical Haptic Perception Interfaces

● Non-invasive bidirectional body-machine interfaces

● Wearable sensors in XR

● Low latency gaze and gesture-based interaction in XR

● 3D acquisition with physical properties

● AI-assisted 3D acquisition of unknown environments with semantic priors

● AI-based convincing XR presentation in limited resources setups

● Hyper-realistic avatar

Impact:

The results of this scenario will provide a radically new perspective on improving human capital in R&I, allowing excluded people to communicate their ideas while providing substantial benefits to those who will use the technology. The project is expected to deliver usable prototypes that will pave the way for rapid industrialisation, improving people’s quality of life, work and education.

Latest News

SUN approach to improve motor control in incomplete tetraplegia

Incomplete tetraplegia, a partial paralysis affecting all four limbs due to cervical spinal cord injuries, is more common than complete tetraplegia in Europe. It significantly impairs upper limb motor function and daily activities. The SUN platform pilot project aims...

AI, XR and the patient perspective

AI-driven processes are transforming healthcare by enabling new diagnostic methods, personalizing treatments, improving administrative efficiency, and driving research innovations. At the same time, these benefits must be critically evaluated...

SUN Project Shines at HELT Symposium: Pioneering Human-Centered XR for One-Health

The EU Horizon SUN Project (Social and Human-Centered XR) took center stage at the Health, Law, and Technology (HELT) Symposium on April 24th, hosting a groundbreaking workshop titled “Social and...

SUN Project Pilot3 Phase one Completed at Campus Biotech, Geneva

From April 14–16, 2025, the SUN project team gathered at Campus Biotech in Geneva for a crucial milestone: the validation of the interactive system with healthy participants. The system allows users to control virtual environments through forearm EMG signals,...

Non-invasive brain stimulation (NIBS) techniques

Non-invasive brain stimulation (NIBS) techniques, such as transcranial magnetic stimulation (TMS) and transcranial direct current stimulation (tDCS), are increasingly being used in stroke rehabilitation to enhance recovery and neuroplasticity. NIBS promotes brain...

Pilot 3 final scenario assessment

On September 6, 2024, the solutions and methodologies under development in the SUN XR project for Pilot 3 were shared with clinicians and therapists from our partner EPFL, particularly focusing on three pilot scenarios aimed at enhancing patient care....

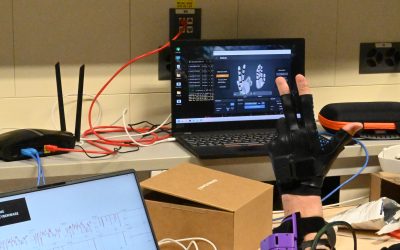

Haptic glove in rehabilitation therapies

Virtual reality is revolutionizing the user experience in different fields, also through the integration of advanced haptic devices. Within the SUN project, Scuola Superiore Sant'Anna is developing these devices to enhance the user experience in immersive and...

The three pilots are ready to be carried out

All requirements and procedures needed to execute the experimentation in the different scenarios were carefully identified. The validation process will occur in two phases: the first phase will involve healthy individuals utilizing the initial release of the SUN...

Sensory perception and acceptance of prosthetic limbs

Researchers at EPFL in Switzerland have created a groundbreaking technology that allows amputees to experience the sensation of wetness through their prosthetic limbs. This innovation involves a sensor attached to the prosthetic hand connected to a stimulator that...

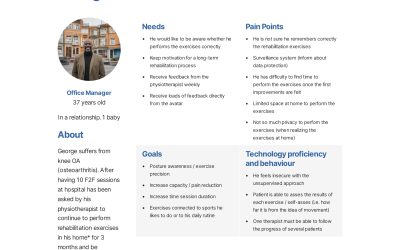

SUN XR User requirements

SUN user requirements have been finalised by KPEOPLE and IN2 (https://in-two.com/). The phases of co-creating user requirements went from personal interviews with the end users participating in the project (CRR-Suva, OAC, ASL-NO, FACTOR) to workshops with all partners...

Highly wearable haptic interfaces

Virtual reality is becoming increasingly popular, embracing not only pure entertainment but also crucial industrial and rehabilitative sectors. Within the SUN XR project, the new challenges of virtual reality are materializing through...

SUN – VR Application for Cerebral Rehab after Stroke

Over 80% of stroke patients suffer from motor deficits in the acute phase, and 30-50% still do in the chronic phase. The SUN XR Platform has the potential to reach a large group of stroke patients, especially in view of the more than 1.5 million strokes per year in...

Towards a prosthetic hand

Involvement of end-users to ensure the usefulness of the solutions in Pilot 3

On January 22, we held our first SUN-XR stakeholder meeting at the Clinique romande de réadaptation. It was an excellent opportunity to bring together representatives of patients’ associations (@Fragile Suisse), clinicians and innovation specialists (@The ARK, @EPFL)....

First Pilot 3 External Stakeholders’ Meeting in Sion (CH)

The role of external stakeholders is crucial to guarantee the "social" aspects of our project. For this reason, a stakeholders' group is going to be established in each pilot. The first Pilot 3 stakeholders' meeting took place in Sion (CH), involving professionals,...

SUN-XR to help stroke patients to recover.

Annually, 15 million people are affected by a stroke worldwide. For a third of these patients, the stroke is fatal, while another third will suffer permanent disability. The rehabilitation process for patients starts in the early days after the injury and represents a...