eXtended reality for Rehabilitation

Focus Area(s): Smart living: rehabilitation, ergonomics, postural analysis, exercising

Scenario description:

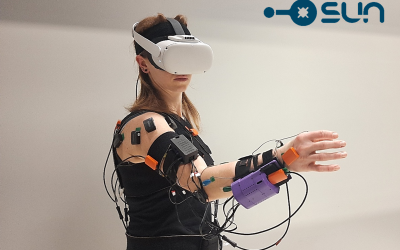

The aim of the proposed rehabilitation scenario is to motivate patients to exercise efficiently by giving them feedback on their performance, wherever the physiotherapy exercises are performed: in a clinic, at home, indoors, outdoors or even in public areas. The scenario is based on a digital tool that employs VR, AR and MR to assist and monitor individual motor learning within a supervised, personalised, remote exercise rehabilitation program for the management of injuries and pathologies. The digital tool will also enable supervised personal training. Important success factors for home-based programs are including patients with a favourable prognosis and increasing adherence to the program. The main goal of this scenario is to improve compliance with the physiotherapy protocol, increase patient engagement, monitor physiological conditions and provide immediate feedback to the patient by classifying each exercise in real time as correctly or incorrectly executed, according to criteria set by physiotherapists.

It is already known that visuo-physical interaction results in better task performance than visual interaction alone. Wearable haptics (such as EMG) can be used to enhance physical interaction by monitoring contextual information about the body. Visual AI algorithms can improve the understanding of body alignment by capturing the body outline and giving real-time personalised feedback to improve the quality of the movement.

Rehabilitation adherence and fidelity are especially challenging, as is motor learning with personalised feedback. Physiotherapy after orthopaedic surgery, and for other kinds of upper limb motor impairment such as “frozen shoulder” or severe hand arthritis, is crucial for complete rehabilitation but is often repetitive, tedious and time-consuming. Motor recovery can require a very long course of physiotherapy, sometimes more than 50 sessions.
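
The real-time classification of an exercise against physiotherapist-set criteria could take many forms. The following is only a minimal rule-based sketch, not the tool's actual method: the `ExerciseCriteria` fields, the threshold values and the feedback messages are all hypothetical, and the joint and trunk angles are assumed to have been estimated already from the camera or wearable sensors.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ExerciseCriteria:
    """Hypothetical thresholds a physiotherapist might set for one exercise."""
    min_peak_angle_deg: float    # range of motion the joint must reach
    max_trunk_lean_deg: float    # tolerated compensatory trunk lean


def classify_repetition(
    joint_angles_deg: Sequence[float],
    trunk_lean_deg: Sequence[float],
    criteria: ExerciseCriteria,
) -> Tuple[bool, List[str]]:
    """Return (is_correct, feedback_messages) for one repetition.

    Both sequences hold per-frame angles in degrees for the same repetition.
    """
    if not joint_angles_deg or not trunk_lean_deg:
        raise ValueError("a repetition needs at least one sample per signal")

    feedback: List[str] = []
    # Criterion 1: the joint must reach the prescribed range of motion.
    if max(joint_angles_deg) < criteria.min_peak_angle_deg:
        feedback.append("Raise the limb higher to reach the target range.")
    # Criterion 2: the trunk must not lean beyond the tolerated angle.
    if max(abs(a) for a in trunk_lean_deg) > criteria.max_trunk_lean_deg:
        feedback.append("Keep the trunk upright; avoid leaning.")
    # The repetition counts as correct only if no criterion was violated.
    return (not feedback, feedback)
```

For example, with a hypothetical 90° target and a 10° trunk-lean tolerance, a repetition that peaks at 70° would be classified as incorrect and the patient prompted to reach higher.
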
Therefore, there is a need to address the evidence-practice gap and translate digital innovations into practice, while enabling their improvement and enhancing their accuracy and portability in the home environment. Starting from a visual representation of an exercise, delivered through an avatar of the therapist performing it correctly, the patient will be asked to repeat the exercise, either afterwards or in sync with the avatar.

Wearables and wireless sensors will be used to measure and deliver contextual information: multiple IMUs (dynamic motion, body and limb orientation, kinetics and kinematics, maximum angle achieved at each joint), sEMG (neuromuscular potentials, indicating activation and fatigue) and a smartwatch (heart rate, oxygen saturation, arterial blood pressure). Besides an accelerometer, a flex sensor could be employed instead of a gyroscope to measure specific angles during limb bending, and a force-sensitive sensor to measure limb pressure, with the potential of classifying a variety of rehabilitation exercises over a sensor network. Data from a camera and the sensors will be collected in real time and sent to a GPU server, either cloud-based (exploiting cloud AI infrastructure) or edge-based (exploiting edge AI). An AI algorithm will process the sensory input, responding to the level of muscle activation by reducing or increasing the exercise difficulty and intensity, and to the movement quality by providing personalised feedback on movement correctness. Clinicians’ feedback can also be added at any point during the automated feedback to enhance accuracy, ensure safety and further improve the algorithm.

Usability testing is required before such complex, innovative digital tools are applied. A further goal of this scenario is to increase user engagement across diverse populations. This can be achieved through personalisation to diverse populations and their rehabilitation needs and abilities.
An iterative, convergent mixed-methods design will be used to assess and mitigate all serious usability issues and to optimise user experience and adoption. This design will provide transparency and guidance for the development of the tool and its implementation into clinical pathways. The methodological framework is defined by the constructs of ISO 9241-210:2019 (Human-centred design for interactive systems): effectiveness, efficiency, satisfaction and accessibility.
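
Two steps of the sensing and adaptation loop described in this scenario, deriving a joint angle from limb orientation and adjusting difficulty in response to muscle activation, can be illustrated with a short sketch. It rests on several assumptions that are not part of the scenario itself: each limb segment's direction is already available as a 3D vector (for instance derived from IMU orientation), sEMG activation has been normalised to the range 0 to 1, and the function names, thresholds and difficulty scale are hypothetical.

```python
import math
from typing import Sequence


def joint_angle_deg(proximal: Sequence[float], distal: Sequence[float]) -> float:
    """Angle in degrees between two 3D segment direction vectors.

    This is the geometric angle between the vectors; mapping it to a
    clinical flexion angle depends on the chosen anatomical convention.
    """
    dot = sum(p * d for p, d in zip(proximal, distal))
    norm = math.sqrt(sum(p * p for p in proximal)) * math.sqrt(
        sum(d * d for d in distal)
    )
    if norm == 0.0:
        raise ValueError("segment direction vectors must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def adapt_difficulty(
    level: int,
    activation: float,
    low: float = 0.3,    # hypothetical: below this the exercise is too easy
    high: float = 0.8,   # hypothetical: above this the muscle is overloaded
    min_level: int = 1,
    max_level: int = 10,
) -> int:
    """Return the next difficulty level given normalised sEMG activation."""
    if activation > high:
        level -= 1       # reduce difficulty when activation is very high
    elif activation < low:
        level += 1       # increase difficulty when activation is low
    # Keep the level inside the allowed range.
    return max(min_level, min(max_level, level))
```

A simple threshold rule is used here only because it is easy to inspect; the scenario leaves the choice of AI algorithm open, and a clinician could override the suggested level at any point.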

Technical challenges:

● Real Physical Haptic Perception Interfaces

● Non-invasive bidirectional body-machine interfaces

● Wearable sensors in XR

● Low latency gaze and gesture-based interaction in XR

● 3D acquisition with physical properties

● AI-assisted 3D acquisition of unknown environments with semantic priors

● AI-based convincing XR presentation in limited resources setups

● Hyper-realistic avatar

Impact:

This use case aims to highlight the integration of innovative technologies (multimodal sensors, AI, XR, smart appliances) into rehabilitation and supervised exercising in the home environment, under specialist feedback and supervision, in order to achieve a higher level of compliance among participants. Real-time monitoring through camera, sensors and wearables, XR avatars, augmented visual feedback, the AI algorithm and the therapist’s perspective are combined and delivered to the patient through the most familiar means, a smartphone or any other smart appliance, with the aim of increasing compliance. A successful outcome will allow planning of a fully integrated XR approach to remote rehabilitation and exercising that uses full-body data. This aims to be the future of remote rehabilitation and exercising for special populations.
