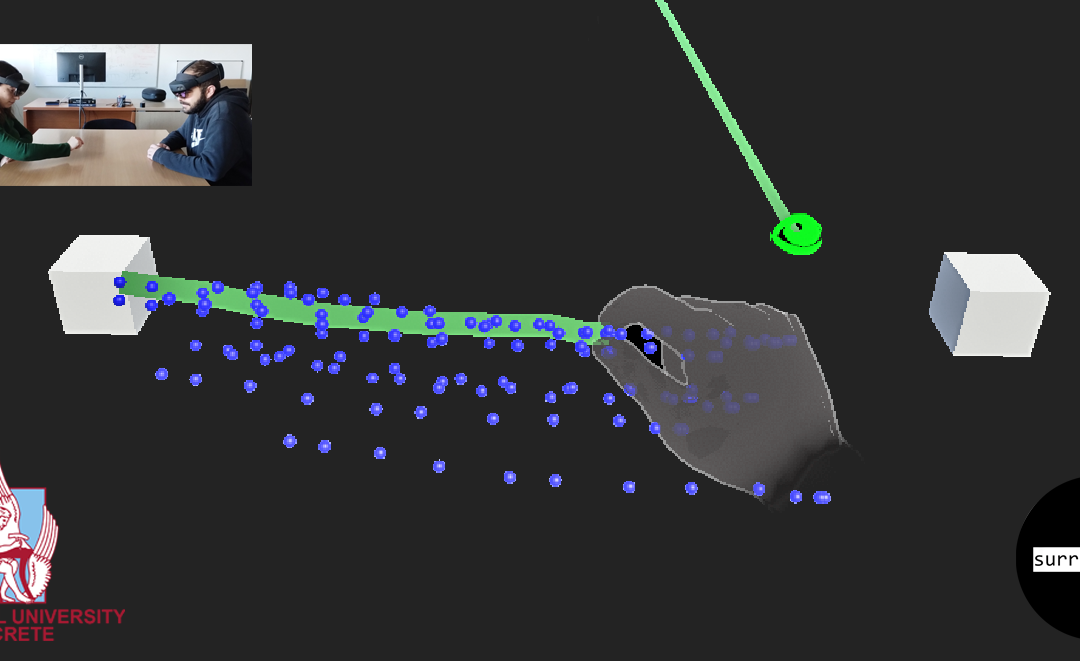

The core of XR collaboration is not merely sharing a virtual space but enhancing how users interact within it. Visualizing communication cues such as hand movements and gaze is critical for intuitively conveying collaborators’ intentions and actions, and thus for facilitating seamless interaction. By leveraging the temporal and spatial characteristics of visual cues such as hand trajectories, gaze direction, or virtual pointers, we aim to significantly enhance situational awareness and task efficiency within the shared XR space.

In the SUN project, TUC is developing collaboration paradigms focused on the real-time visualization of these cues within the field of view of XR devices. The objective is to display the essential information about a collaborator’s spatial reference or intended actions without overloading the user cognitively. Striking this balance is key to maintaining immersion and effectiveness in collaborative tasks: each participant remains aware of their collaborators’ intentions, with all relevant cues kept within their own field of view.